I really don’t want to add to the web3 debate (not least because I have skin in the game, advising a PR agency that works with DeFi firms), except to make some observations about its predecessor.

I feel on safer ground here because I was there, and I know what I saw: Web 2.0 wasn’t what most people think it is, or was. It means slightly different things to different people. But here, in essence, is how it evolved. I don’t claim to have intimate knowledge of how it went down, but as a WSJ technology columnist I had enough of a view to know some of the chronology and how some of those involved saw it.

The key principles of Web 2.0 (though never stated) were: share; make things easy to use; encourage democratisation (of information, of participation, of feedback loops).

The key elements that made this possible were:

- tagging: make things easier to find and share (del.icio.us, for example, or Flickr);

- RSS: build protocols that make it easy for information to come to you (think blogs, but also podcasts and early Twitter; also torrents and P2P);

- blogging tools: WordPress, Blogger and Typepad, for example, which made it easy to create content and just as easy for people to share and comment on it;

- wikis: make it easy for people to contribute knowledge, irrespective of background (Wikipedia being the best known).

Some would disagree with this, but that is the problem with calling it Web 2.0. People weren’t sitting around saying ‘let’s build Web 2.0!’ They were just building stuff that was good, and gradually a sort of consensus emerged that welcomed tools and ideas that felt in line with the zeitgeist. And that zeitgeist, especially after the bursting of the dot-com bubble in 2001, was: let’s not get hung up on producing dot-com companies or showing a bit of leg to VCs; let’s instead share what we can and figure it out as we go along. It’s noticeable that none of the companies or organisations I mention above made a ton of money. In fact the likes of RSS and Wikipedia were built on standards that remain open source to this day.

Of course there were a lot of other things going on at the time, which all seemed important somehow, but which were not directly associated with Web 2.0: Hardware, like mobile phones, Palm Pilots and Treos; iPods. Communication standards: GPRS, 3G, Bluetooth, WiFi.

The important thing here is that we now tend to think of Web 2.0 as also including Google, Apple, Amazon and so on. But for most people at the time, they weren’t part of it. Google was to some extent part of things because it built an awesome search engine that, importantly, had a very clean interface, didn’t cost anything, and worked far better than anything that had come before it. But that was generally regarded as a piece of plumbing, and Web 2.0 wasn’t interested in plumbing. The web was already there. What we wanted to do was to put information on top of it, to make that available to as many people as possible, and where possible to not demand payment for it. (And no, we didn’t call it user-generated content.)

Yes, they were idealistic times. Not many people at the heart of this movement were building things with monetisation in mind. Joshua Schachter built del.icio.us in 2003 while an analyst at Morgan Stanley; it was one of the first services, if not the first, to let users add whatever tags they wanted, in this case to bookmarks. It’s hard to express just how transformational this felt: we were allowed to attach words of our own choosing to something online, words that helped us find that bookmark again, irrespective of any formal system. Del.icio.us allowed us to do something else, too: to share those bookmarks with others, and to search other people’s bookmarks via tags.
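
For anyone who never used it, the idea can be sketched in a few lines of Python. This is only an illustration of free-form tagging as I have described it, not how del.icio.us was actually built; the `BookmarkStore` class, the user names and the URLs are all made up for the example.

```python
from collections import defaultdict


class BookmarkStore:
    """Toy model of free-form tagging (hypothetical, for illustration only)."""

    def __init__(self):
        # tag -> set of (user, url) pairs; there is no fixed vocabulary
        self._by_tag = defaultdict(set)

    def add(self, user, url, tags):
        """Save a bookmark under whatever tags the user chooses."""
        for tag in tags:
            self._by_tag[tag.lower()].add((user, url))

    def search(self, tag):
        """Find everyone's bookmarks for a tag, not just your own."""
        return sorted(self._by_tag.get(tag.lower(), set()))


store = BookmarkStore()
store.add("alice", "https://example.com/peat", ["bogs", "toread"])
store.add("bob", "https://example.org/wetlands", ["Bogs", "ecology"])

for user, url in store.search("bogs"):
    print(user, url)
```

Nobody decides in advance what the categories are: a tag exists the moment someone uses it, and a search turns up other people’s bookmarks as readily as your own.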

It sounds underwhelming now, but back then it was something else. It democratised online services in a way that hadn’t been done before, by building a system that was implicitly recognising the value of individuals’ contributions. It wasn’t trying to be hierarchical — like, say, Yahoo!, which forced every site into some form of Dewey Decimal-like classification system. Tagging was inherently democratic and, as important, trusting of users’ ability and responsibility to help others. (For the best analysis of tagging, indeed anything about this period, read the legendary David Weinberger.)

Wikipedia had a similar mentality, built on the absurd (at the time) premise that if you give people the right tools, they can organise themselves into an institution that creates and curates content on a global scale. For the time (and still now, if you think about it) this was an outrageous, counterintuitive idea that seemed doomed to fail. But it didn’t; the one that did fail was an earlier model that relied on academics to contribute to those areas in which they were specialists. Only when the doors were flung open, and anybody could chip in, was something created.

This is the lesson of Web 2.0 writ large. For me Really Simple Syndication (RSS) is the prodigal son of Web 2.0: a standard, carved messily around competing versions, through which any site creating content could automatically assemble and deliver that content to any device that wanted it. No passwords, no signups, no abuse of privacy. This was huge: it suddenly allowed everyone, whether you were The New York Times or Joe Bloggs’s Blog on Bogs, to deliver content to interested parties in the same format, to be read in the same application (an RSS reader). Podcasts used RSS to deliver content and, although I can’t prove this, so did Twitter. Indeed, the whole way we ‘subscribe’ to things (think of following a group on Facebook or a person or list on Twitter) is rooted in the principles of RSS. In some ways RSS was a victim of its own success: it was a powerful delivery mechanism with privacy baked in, since users were never required to submit their personal information, which saved them from spam, for example. So the ideas of RSS were adopted by Social Media, but without the bits that put the controls in the hands of the user.
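
To give a flavour of how little was involved, here is a rough sketch in Python of what an RSS reader does with a feed. The feed itself is invented (the blog name is borrowed from above, the URLs are placeholders), and a real reader would fetch the XML over HTTP and cope with the competing RSS and Atom variants; this only assumes a plain RSS 2.0 document.

```python
import xml.etree.ElementTree as ET

# A made-up RSS 2.0 feed; any site could publish something like this.
SAMPLE_FEED = """<rss version="2.0">
  <channel>
    <title>Joe Bloggs's Blog on Bogs</title>
    <link>https://example.com/</link>
    <description>Hypothetical feed for illustration</description>
    <item>
      <title>Peat, revisited</title>
      <link>https://example.com/peat</link>
    </item>
    <item>
      <title>Why fens are not bogs</title>
      <link>https://example.com/fens</link>
    </item>
  </channel>
</rss>"""


def read_feed(xml_text):
    """Return the feed title and a list of {title, link} entries."""
    channel = ET.fromstring(xml_text).find("channel")
    entries = [
        {"title": item.findtext("title"), "link": item.findtext("link")}
        for item in channel.findall("item")
    ]
    return channel.findtext("title"), entries


feed_title, entries = read_feed(SAMPLE_FEED)
print(feed_title)
for entry in entries:
    print("-", entry["title"], entry["link"])
```

Note what is absent: no account, no login, no email address. The reader only needs the feed’s address, which is the privacy point made above.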

And then there were the blogging tools themselves. Yes, blogging predated the arrival of services like Blogger, Typepad and WordPress. But those tools, appearing from late 1999, helped make it truly democratic, requiring no HTML or FTP skills on the part of the user, and positively encouraging the free exchange of ideas and comments. There was a subsequent explosion in blogging, which in some ways was more instrumental in ending the news media’s business model than Google and Facebook were. We weren’t doing it because we were nudged by some algorithm. We wrote and interacted because we wanted to have conversations, a chance for people to share ideas, in text or voice.

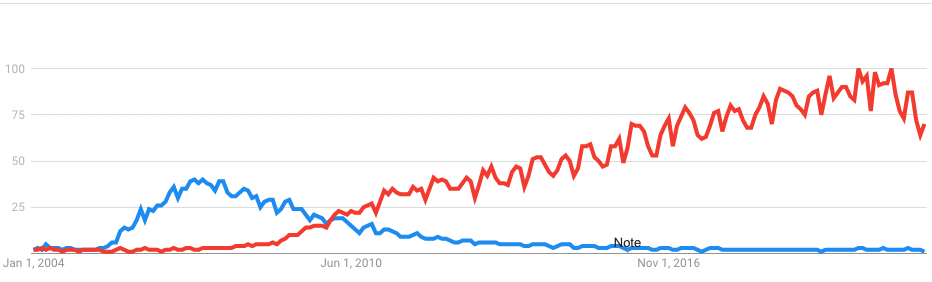

Web 2.0, then, started in 1999 with the first blogs, and was in steady decline by 2007, when VC money was pushing the likes of Social Media to scale in a way Web 2.0 hadn’t. Twitter was the first to the table, globally, as more ‘friendship’ oriented services like Friendster and MySpace, and later Facebook, slugged it out. By 2010 Google was dominant: it had bought Blogger and boxed RSS into a corner with its Google Reader (which it subsequently canned), while Yahoo had bought del.icio.us. And of course, there was the iPhone, and then the iPad, and by then the idea of mashing tools together to build a democratic (and largely desktop) universe was quietly forgotten as the content became the lure and we became the product.

Social Media is an industry; Web 2.0 was a movement of sorts. The writing was on the wall in 2005 when technical publisher Tim O’Reilly coined the term, which his company then trademarked. (That didn’t end well for him.)

So what are the lessons to draw from this for web3? Well, one is to see there are two distinct historical threads: ‘Web 2.0’ and ‘Social Media’. To many of those involved there was a distinct shift from one to the other, and I’m not sure it was one many welcomed (hard though it was to see at the time). So if web3 is a departure, it’s worth thinking about what it’s a departure from. The other lesson: don’t get too hung up on defining yourself against something. The greatest parts of ‘Web 2.0’ were just things that people came up with that were cool, were welcome, and gave rise to other great ideas. Yes, there were principles, but it’s not as if they were written in stone, or even defined as such. And while there was some discussion of protocols, it was really about what material and functionality could be built on the existing infrastructure, which was still a largely static one (the first phones to have certified WiFi didn’t appear until 2004).

I do think there are huge opportunities to think differently about the internet, and I do think there’s a decent discussion going on about web3 that points to something fundamental changing. My only advice would be to not get too hung up about sealing the border between web3 and Web 2.0, because the border is not what most of us think it is — or even a border. And for those of us natives of Web 2.0, I think it’s worth not feeling offended or ok boomer-ed and to follow the discussions and development around web3. We may have more in common than you think.

I followed the same path you did (I’m 65 now!) and fully agree with your opinion: it’s all about the people’s desire.

Interesting. Still as an “old-timer” (yet 15 years younger than Forin commenting above), who sent his first international email in 1991, I would add Web 1.0 (or was it Web 0.0?), with its bulletin board system (BBS), discussion lists (using anonymized emails, of course), newsgroups (Usenet and otherwise), autoresponders … – almost all mentioned in this article had been already there and then, albeit known under other fancy names.

And yes, there were also sockpuppets, viruses, “evil” administrators, spam (the first ones sent in the 1970s), commerce, extortion, bomb threats, blackmail and black ops, spies (real ones) and much worse.

Thus: nihil novi now in the 2020s, apart from maybe more AI and more automated totalitarianism: “the weapons of maths destruction”.
